The Problem

We all know the classic chicken-and-egg problem: without a chicken there is no egg, and without an egg there is no chicken.

In the context of Kubernetes clusters, we face a similar dependency challenge: I need working DNS to mesh multiple Kubernetes clusters with Cilium cluster mesh. Manually creating DNS records whenever the Cilium cluster mesh API server creates a Service of type LoadBalancer might work for 3, 5, maybe 20 clusters, but it becomes tedious and impractical when your mesh includes a few hundred clusters.

Why Mesh Clusters?

Before we get to the solution I have implemented to achieve DNS automation, I want to cover why meshing clusters is valuable. There are multiple use cases for connecting clusters together:

- Shared Services: In our use case, we are primarily interested in hosting a service in one centralized cluster and sharing that service with all clusters in the mesh. This eliminates the need to redeploy the same service in multiple clusters. Examples include Vault for sharing secrets, or your logging and monitoring stack.

- High Availability: Meshed clusters provide highly available and fault-tolerant environments ideal for production workloads.

For this implementation, we have decided to use Cilium cluster mesh as our solution.

Where Does DNS Fit In?

Cilium can mesh clusters using different methods. One straightforward approach is to run cilium clustermesh commands from the Cilium CLI to join clusters, which might work for small deployments but is not ideal for tens or hundreds of clusters.

The best way to automate deploying Cilium cluster mesh is by using Helm. Here's an example values file (covering just the mesh part):

    clustermesh:
      config:
        enabled: true
        domain: mesh.example.com
        clusters:
          - name: cluster1
            port: 2379
            address: cluster1.mesh.example.com
          - name: cluster2
            port: 2379
            address: cluster2.mesh.example.com
          - name: cluster3
            port: 2379
            address: cluster3.mesh.example.com
          ...

You would add all the clusters that you want to mesh and install Cilium with Helm. I have left out many other options to keep this article simple.

However, even when every cluster is operational, the clusters cannot form a mesh until each of these addresses resolves in DNS!

Why Not External-DNS?

You might wonder why we don't use ExternalDNS. While it's a viable option, it has to run in every cluster in the mesh, which defeats our goal of not duplicating services. It also means giving every cluster credentials for the DNS provider, which is especially problematic for clusters where you don't want to expose your secrets.

The Proposed Solution

Now that we've covered the motivation, let's detail the automated solution!

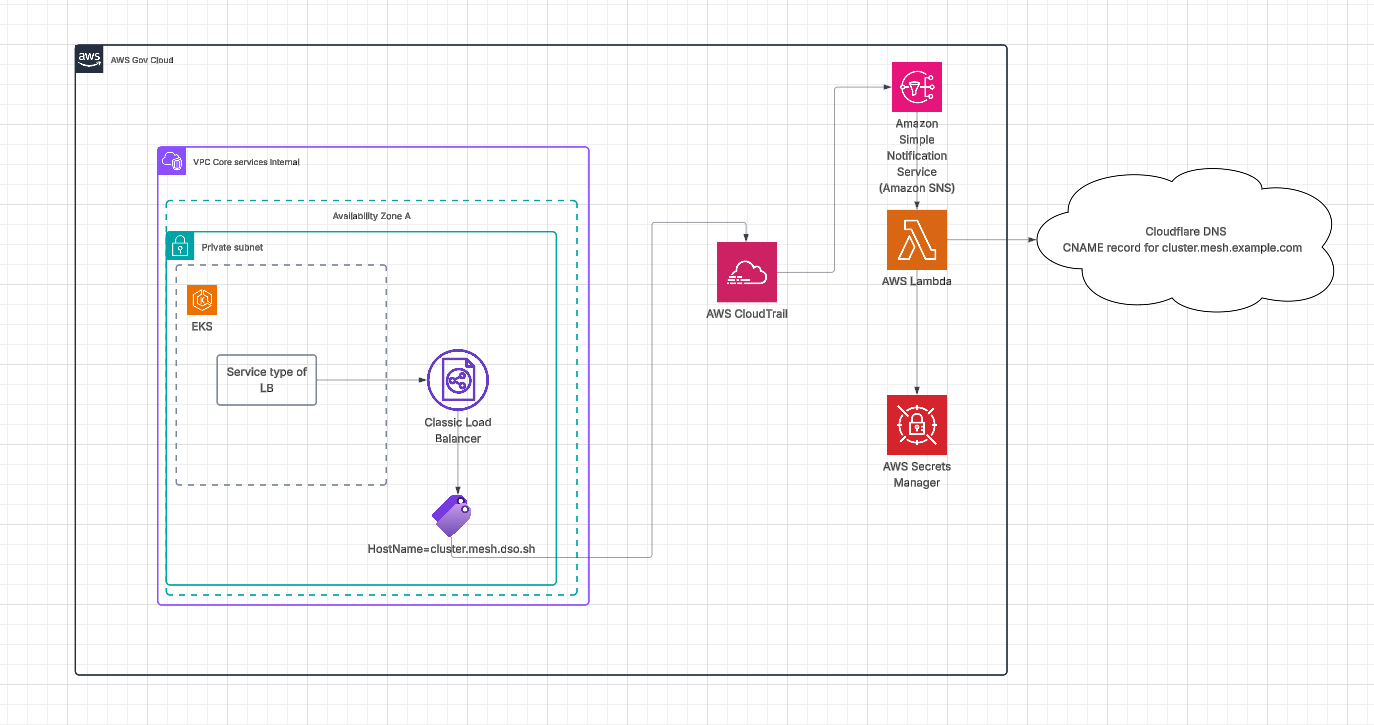

I want the solution to be automated and operate outside of the clusters so they can join the mesh. This eliminates the need for duplicating services across all clusters and avoids sharing secrets among all clusters.

When the Cilium cluster mesh API server creates a Service of type LoadBalancer, AWS provisions an internal load balancer for it. It's straightforward to add an annotation to the Service that tags the load balancer with something like hostname=cluster1.mesh.example.com.
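As a rough illustration of that annotation, the Helm values below are a minimal sketch rather than the exact configuration used here. They assume the clustermesh.apiserver.service values of the Cilium chart and the AWS additional-resource-tags Service annotation. The annotation that makes the load balancer internal depends on which AWS load balancer integration your cluster runs, so treat that line in particular as an assumption to verify.

```yaml
clustermesh:
  useAPIServer: true
  apiserver:
    service:
      type: LoadBalancer
      annotations:
        # Assumption: legacy in-tree annotation; the AWS Load Balancer
        # Controller uses aws-load-balancer-scheme: internal instead.
        service.beta.kubernetes.io/aws-load-balancer-internal: "true"
        # Tags the load balancer with the DNS name it should receive.
        service.beta.kubernetes.io/aws-load-balancer-additional-resource-tags: "hostname=cluster1.mesh.example.com"
```

The hostname value has to differ per cluster, so in practice it would be templated for each cluster's values file.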

The solution works as follows:

- CloudTrail watches for specific events related to the creation, modification, or deletion of hostname tags on load balancers, as well as the creation and deletion events of the load balancers themselves.

- An SNS topic is notified of these events and in turn triggers a Lambda function.

- The Lambda function makes API calls to the DNS provider (for example, Cloudflare).

This simple solution works outside of the cluster. You can store and retrieve your secrets in AWS Secrets Manager or Vault, and your clusters don't need access to this information.
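To make the flow above more concrete, here is a minimal Python sketch of what the Lambda function could look like. It is not the actual implementation. It assumes the SNS message carries the CloudTrail record, either directly or under a "detail" key as it would if forwarded by an EventBridge rule. It also assumes field names such as resourceArns, tags, tagKeys, and loadBalancerArn match what CloudTrail records for the load balancer API; check these against a real event before relying on them. The functions plan_dns_change and apply_dns_change are hypothetical names, and the DNS provider call is left as a placeholder.

```python
import json

HOSTNAME_TAG = "hostname"  # tag key used in the example above


def _hostname_from_tags(tags):
    """Return the value of the hostname tag, or None if it is absent."""
    for tag in tags or []:
        if tag.get("key") == HOSTNAME_TAG:
            return tag.get("value")
    return None


def plan_dns_change(record):
    """Map one CloudTrail record (a dict) to a planned DNS change, or None.

    Field names are assumptions about the CloudTrail record layout.
    """
    name = record.get("eventName")
    params = record.get("requestParameters") or {}
    if name == "AddTags":
        hostname = _hostname_from_tags(params.get("tags"))
        if hostname:
            return {"action": "upsert", "hostname": hostname,
                    "load_balancers": params.get("resourceArns") or []}
    elif name == "CreateLoadBalancer":
        hostname = _hostname_from_tags(params.get("tags"))
        created = (record.get("responseElements") or {}).get("loadBalancers") or []
        if hostname:
            return {"action": "upsert", "hostname": hostname,
                    "load_balancers": [lb.get("loadBalancerArn") for lb in created]}
    elif name == "RemoveTags":
        if HOSTNAME_TAG in (params.get("tagKeys") or []):
            # The event does not carry the old value, so the record to delete
            # has to be looked up by load balancer ARN.
            return {"action": "delete", "hostname": None,
                    "load_balancers": params.get("resourceArns") or []}
    elif name == "DeleteLoadBalancer":
        return {"action": "delete", "hostname": None,
                "load_balancers": [params.get("loadBalancerArn")]}
    return None


def apply_dns_change(change):
    """Placeholder: call the DNS provider's API here (for example, Cloudflare)."""
    print("planned DNS change:", change)


def handler(event, context):
    """Lambda entry point for SNS-triggered invocations."""
    changes = []
    for sns_record in event.get("Records", []):
        message = json.loads(sns_record["Sns"]["Message"])
        record = message.get("detail", message)  # assumption: optional envelope
        change = plan_dns_change(record)
        if change is not None:
            apply_dns_change(change)
            changes.append(change)
    return changes
```

Keeping the event parsing in a pure function separate from the provider call makes the mapping easy to test locally with sample events, before any DNS credentials are involved.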